Overview

Social media is a double-edged sword. While it connects us, it also spreads misinformation at lightning speed. X (formerly Twitter) introduced Community Notes to fight this problem, giving users the power to add context to misleading posts. But does it actually work? Little research has examined how effective the system is from a user's perspective. This project explores how people interact with Community Notes, what makes them trust (or distrust) them, and how the feature could be improved.


Challenge

Misinformation isn’t just annoying—it has real consequences.

Take Hurricane Beryl, for example. A completely accurate weather forecast was mistakenly flagged as misleading, causing confusion at a critical moment. Or consider the Paris 2024 Olympics, where an Algerian boxer became the target of false claims about her gender. High-profile figures, including Elon Musk, Donald Trump, and J.K. Rowling, amplified misleading statements before Community Notes even stepped in. By the time it did, the damage was done.

These incidents raise an important question: Is Community Notes actually helping users distinguish between fact and fiction? While the tool allows users to crowdsource fact-checking, its real-world impact remains unclear. That’s where this research comes in.

Research Goals

I set out to investigate how well Community Notes works in helping users navigate misinformation. Specifically, I wanted to know:

Why do people create Community Notes? Do their motives align with X’s goal of fighting misinformation, or are they using notes for other reasons?

How do users decide which notes are helpful? What factors influence their ratings?

What can be improved? How can X make Community Notes more reliable and effective?

To answer these questions, I conducted a mixed-methods study, combining analysis of X’s public Community Notes data with primary user research.

Process

Secondary Data Analysis

I conducted a thematic analysis of 300 Community Notes from X’s public dataset, categorising them by purpose. Some interesting trends emerged:

Most notes do address misinformation, but not always in the way X intends.

Some notes just add extra details rather than debunking false claims.

Others are totally off-track, expressing personal opinions or even joking about the post.

Clearly, there’s confusion about what Community Notes are actually for.

Primary User Research

To understand how users perceive and rate Community Notes, I ran a qualitative study with 16 frequent X users.

Participants reviewed real Community Notes, rated them, and explained their reasoning. I uncovered some key patterns:

Users value credible sources. Notes citing reputable news outlets or government websites were trusted more than those using Wikipedia or random blog posts.

Clarity matters. If a note was too wordy, vague, or poorly written, users found it less helpful—even if the information was accurate.

Bias and groupthink play a role. Many users admitted being influenced by how others had already rated a note, a sign of conformity bias.

One particularly eye-opening moment came when users rated a note contradicting a popular news outlet as "not helpful"—not because the note was inaccurate, but because they instinctively trusted the original post. This showed that credibility isn't just about facts; it's about perception.

Findings

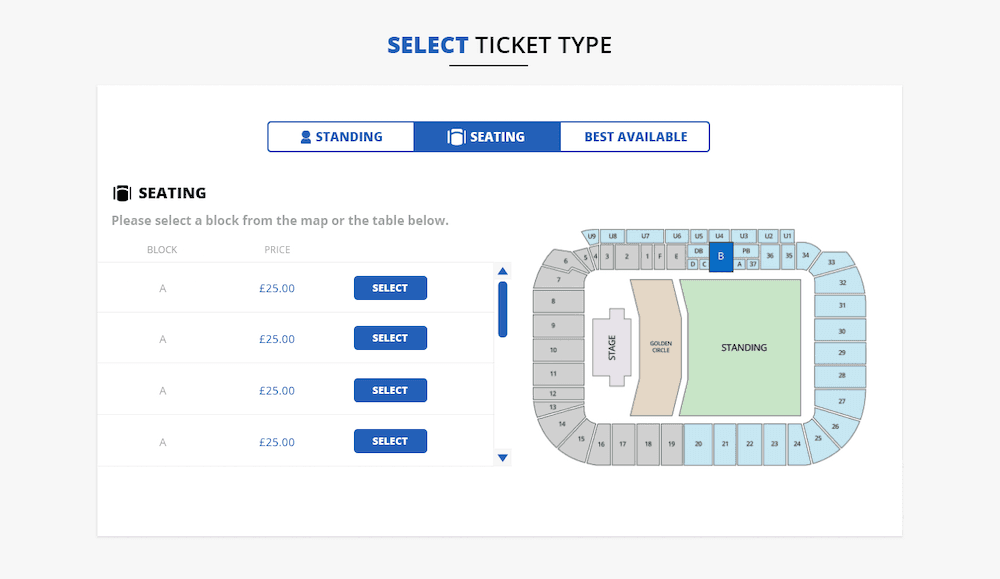

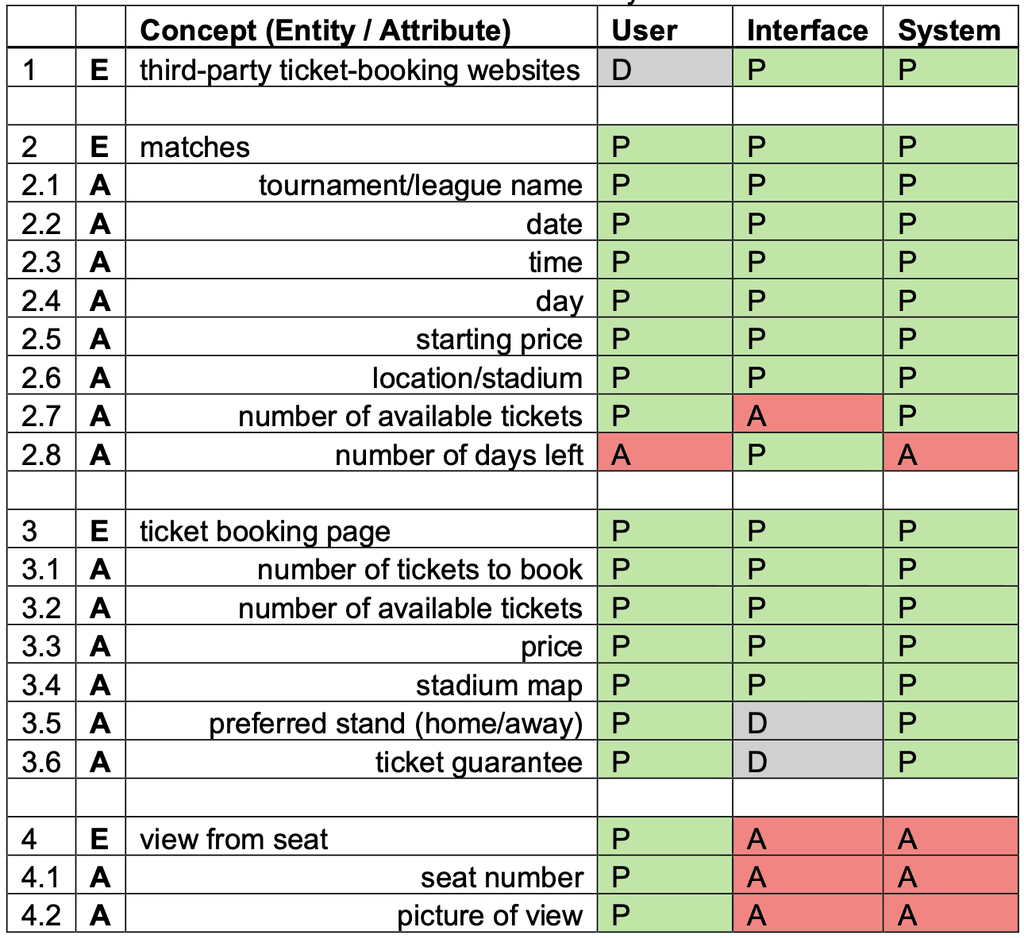

Match Ticket Booking: Surface Misfits

Ticket Guarantees

The unclear presentation of ticket guarantees on third-party sites led participants to hesitate over or abandon the booking process. One user remarked that they would avoid purchasing tickets without visible guarantees, underscoring the importance of clear assurances.

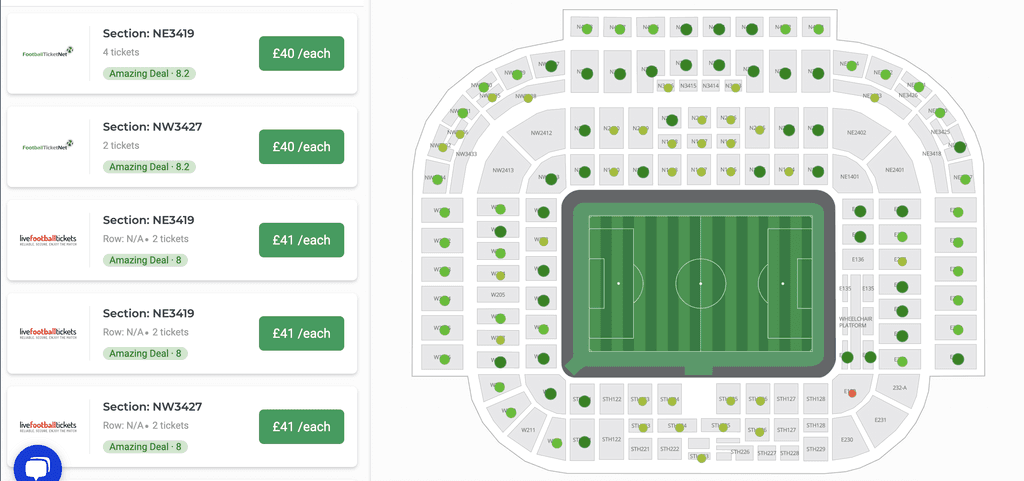

Seat View

Participants highly valued the ability to view the pitch from their seats, a feature absent from the booking interface. Two users relied on third-party websites to visualize their seat view, showing that the system failed to meet a critical user need.

Away Stand Confusion

Fans struggled to locate the away stand on the stadium map. Participants expected it to be clearly marked but found it hidden in a poorly labeled legend. This mismatch between their expectations and the interface led to frustration and, in one case, caused the user to book tickets for a different match.

Accommodation Booking: Situated Action and Shared Understanding

Misleading Filters

Users applied filters for price and location, expecting relevant results. However, participants frequently encountered irrelevant options, leading to confusion and extended time spent searching. One participant abandoned the process entirely after multiple failed attempts to find suitable accommodation.

Expectation Mismatches

Participants expected features such as “city views” to match the imagery presented on hotel pages, and were confused when the actual listing differed from those visual cues. In one instance, a participant changed their plans because of these inaccurate cues, ultimately booking a different accommodation.

Pricing and Availability Issues

Hidden pricing and availability details, particularly whether a room could be booked for multiple nights, caused frustration. One user struggled to work out whether they could book two consecutive nights and ultimately abandoned the task.

Based on the findings, I proposed the following improvements to enhance the user experience for both match ticket booking and accommodation systems:

Improve Information Clarity

Clearly label critical information, such as the away stand location, seating views, and ticket guarantees. Place this information where users expect it to reduce confusion.

Preview Features

Add features that allow users to preview seat views and hotel room views, empowering them to make more informed decisions without needing external resources.

Enhance Filter Functionality

Ensure filters work accurately and consistently to display results that match user expectations. Make pricing, availability, and room options clear upfront.

Trust-Building Features

Prominently display guarantees and assurances during the booking process to increase user confidence and reduce hesitation in completing purchases.

Solution and Impact

The research highlighted critical usability issues that hindered users' ability to book tickets and accommodations smoothly. By addressing these surface misfits and aligning system features with user expectations, I designed a solution that prioritises clarity, trust, and ease of use. The recommendations provide actionable steps for improving digital systems in the travel and sports booking domain, ensuring that users can navigate these processes with greater confidence and efficiency.